Most of the material in this blog post is drawn from an email interview of me by my old pal John Shirley, for the terrific new ezine Instant Future, run by Brock Hinzmann and John.

As is my usual fashion, for my blog-post version I added some images that may seem to have no connection with the interview material. But always remember the fundamental principle of Surrealism: everything goes with everything.

John: Rudy, has anything you predicted, in your science-fiction or in non-fiction, come true?

Rudy: I’ve always wanted someone to ask me about my predictions coming true. I’ll mention two big ones.

A first prediction of mine is that the only way to produce really powerful AI programs is to use evolution. Because it’s literally impossible to write them from the ground up. I got this insight from the incomparable Kurt Gödel himself. The formal impossibility of writing the code for a human-equivalent mind is a result that drops out of Gödel’s celebrated Incompleteness Theorem of 1931. It turns out that creating a mind isn’t really about logic at all. It’s about tangles.

I worked out the details of robot evolution while I was on a mathematics research grant at the University of Heidelberg in 1979. My method of research? I wrote my proto-cyberpunk novel Software. You might call it a thought experiment gone rogue. I had a race of robots living on the Moon and reproducing by building new robots and copying variants of their software onto the newborns. The eventual software tangles are impossible to analyze. But they stoke a glow of consciousness. Like the neuron tangles in our skulls.

By 1993, when I wrote The Hacker and the Ants, I understood that robot evolution should be run at very high speeds, using simulated bots in a virtual world. And I tweaked the evolutionary process so that the fitness tests are co-evolving with the sims; that is, the tests get harder and harder. What were the tests? Increasingly vicious suburban homes inhabited by nasty sims called Perky Pat and Ken Doll.
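The co-evolving fitness test can be sketched as a toy program. This is only an illustration under loud assumptions, not the scheme from the novel: each simulated bot is a list of numbers, its "skill" is simply their sum, and the test is a single difficulty bar that rises whenever more than half the population clears it. The names `evolve`, `difficulty`, and the mutation size are all made up for the example.

```python
import random

def evolve(generations=30, pop_size=20, genome_len=8, seed=0):
    """Toy co-evolution: bots improve by mutation, and the test hardens in response."""
    rng = random.Random(seed)
    # Hypothetical genome: a list of floats. Skill is just the sum (a stand-in).
    bots = [[rng.random() for _ in range(genome_len)] for _ in range(pop_size)]
    difficulty = 1.0  # the bar a bot's skill must clear to pass the test
    history = []
    for _ in range(generations):
        bots.sort(key=sum, reverse=True)
        passed = [b for b in bots if sum(b) >= difficulty]
        # Co-evolution step: when most bots pass, make the test harder.
        if len(passed) > pop_size // 2:
            difficulty *= 1.1
        # Reproduction: the top half survives, and each survivor
        # copies a slightly mutated variant of itself onto a newborn.
        survivors = bots[: pop_size // 2]
        children = [[g + rng.gauss(0, 0.1) for g in parent] for parent in survivors]
        bots = survivors + children
        history.append((difficulty, sum(bots[0])))
    return history

if __name__ == "__main__":
    for step, (bar, best) in enumerate(evolve()):
        print(f"gen {step:2d}  test difficulty {bar:6.2f}  best skill {best:6.2f}")
```

Running it prints the test difficulty and the best bot's skill for each generation. Because the best bots always survive, the top skill never drops, and the bar only ever moves up.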

My second big prediction is what I call a lifebox. This also appears in Software, and it’s a main theme in my nonfiction book, The Lifebox, the Seashell, and the Soul. The idea is that we ought to be able to make a fairly convincing emulation version of a person. The key is to have a very large data base on the person. If the person is a writer, or if they post a lot of messages and email, you have a good leg up on amassing the data. And in our new age of all-pervasive surveillance, it’ll be easy to access untold hours of a non-writing person’s conversation for the lifebox data.

Once you have a rich data base, creating a convincing lifebox isn’t especially difficult, and we’re almost there. On the back end you have the data, thoroughly linked and indexed. On the front end you have a chatbot that assembles its answers from searches run on the data. And you want to have some custom AI that gives the answers a seamless quality, and which remembers the previous conversations that the user has had with the lifebox. This is pretty much what ChatGPT is starting to do.
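The back end and front end described above can be sketched in a few lines. This is a minimal toy, assuming a plain word-overlap search instead of any real AI: the class name `Lifebox`, the `reply` method, and the scoring rule are hypothetical, and the "custom AI" that smooths the answers is left out entirely.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase a string and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

class Lifebox:
    """Toy lifebox: an indexed pile of passages plus a search-driven reply."""

    def __init__(self, passages):
        self.passages = list(passages)
        self.index = defaultdict(set)   # back end: word -> ids of passages using it
        for i, passage in enumerate(self.passages):
            for word in tokenize(passage):
                self.index[word].add(i)
        self.history = []               # remembered (prompt, answer) pairs

    def reply(self, prompt):
        # Front end: score each passage by how many prompt words it shares.
        scores = defaultdict(int)
        for word in set(tokenize(prompt)):
            for i in self.index.get(word, ()):
                scores[i] += 1
        if scores:
            # Highest score wins; ties go to the earliest passage.
            best = max(scores, key=lambda i: (scores[i], -i))
            answer = self.passages[best]
        else:
            answer = "I don't have anything on that."
        self.history.append((prompt, answer))
        return answer

if __name__ == "__main__":
    # Placeholder passages, invented for the demo.
    box = Lifebox(["Notes about robots evolving on the Moon.",
                   "Notes about painting with oils."])
    print(box.reply("Tell me about painting"))
```

A real lifebox would need far better retrieval and a language model to stitch the retrieved text into fluent answers. The sketch only shows the shape: index first, search second, remember the conversation.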

By now the lifebox is such a staple of SF films and novels that people think the notion of a lifebox has always been around. It wasn’t. Someone had to dream it up. And that was me, with Software. It wasn’t at all easy to come up with the idea. It looks simple and obvious now, but in the Seventies it wasn’t.

One of the killer lifebox apps will be to help the bereaved to talk with their dead relatives, as described in my Saucer Wisdom.

As a crude experiment I myself set up an online Rudy’s Lifebox that you can converse with…in the weak sense of getting Rudy-text responses to whatever you type in. The Rudy’s Lifebox simply does a search on my rather large website…but I haven’t done any of the ChatGPT-style work needed for a chatbot front end.

I keep hoping that Stephen Wolfram will do that part for me, but I doubt if he will…at least not before he implements the Stephen’s Lifebox.

I know Hallmark Cards is researching this app, and a couple of years ago, Microsoft took the trouble of patenting my lifebox notion. Somehow they forgot to offer me a senior consultant job.

John: Any highlights in your mind for what might be coming in the next fifty years? Perhaps a big, defining trend you expect?

Rudy: I’d like to see some new physics. We’ve only been doing what we call “real science” for two centuries. Obviously there’s still a lot to learn. I can’t help thinking that some heavy SF-made-real moves might still resolve the terrible energy vs. climate problem. And maybe the horrible gun problem as well. I actually have some ideas for SF stories about this. I’m thinking about UFO-like “smart magnets” that sweep across the skies, sucking up the weapons like a real electromagnet above a car crusher place.

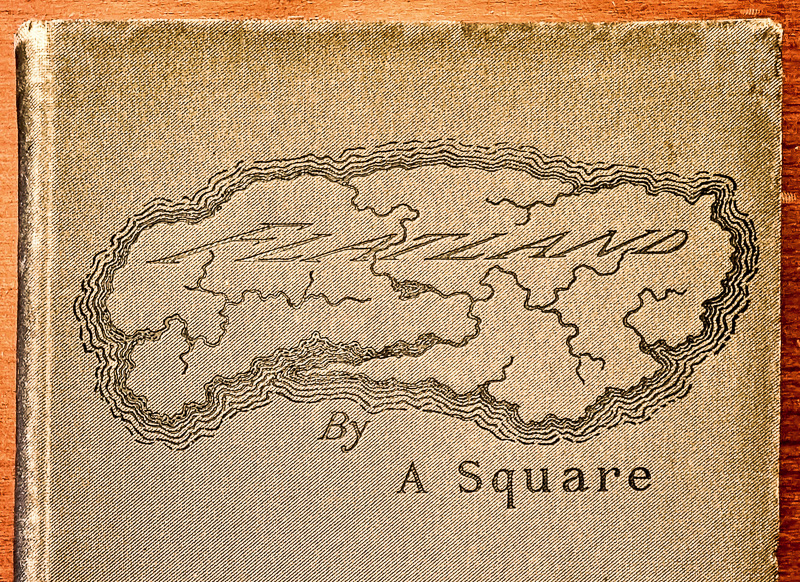

In terms of new wonders, I’m very fond of the subdimensions, that is, the scales below the Planck length. I’d like to get rid of the stingy size-scale limitations prescribed by quantum mechanics. And on another front, it would be great to bring Cantor’s transfinite cardinals into physics; I wrote about this in the latest intro to my nonfiction classic, Infinity and the Mind.

Recognizing the ubiquity of consciousness is yet another trend I see as important. Not just in a dreamy way, but in a lurid, literal sense. Stephen Wolfram has pretty well established that natural phenomena have the same computational complexity as human minds. Now we just need to learn how to talk to rocks. I wrote a novel about this, called Hylozoic.

By the way, “Hylozoism” is an actual dictionary word meaning “the doctrine that all matter is alive.” You don’t even need to make this stuff up. It’s all out there, ready for the taking.

John: Do you believe “the technological singularity” will come true, this century? Or has it crept up on us already?

Rudy: That’s a complicated question. Some say the singularity happened when we got the web working. Others say it’s more like daybreak—not a sudden flash, but a gradual dawning of the light. Or, yet again, if you see the Cosmos as having a mind, then a maximal, singular intelligence has been in place since the dawn of time. No big deal. It’s what is.

The SF writer and computer scientist Vernor Vinge brought the technological singularity into discussion in the early 1990s. His idea was tidy: as our AI programs get smarter, they’ll design still smarter AIs, and we’ll get an exponential explosion of simulated minds. A self-building tower of Babel. But it probably won’t happen like that. If our new AIs are smart, they might not want to design better AIs. After all, there’s more to life than intelligence.

Around 2000, Ray Kurzweil wrote a few books popularizing the singularity, and I was envious of his success. I wrote my novel Postsingular as a kind of rebuke—a reaction to the rampant singularity buzz.

The singularity isn’t the end of SF. I say let it come down, and then write about what happens next. Charles Stross and I both take this approach. We’ll still be humans, living our lives, and we’ll still be as venal and lusty as the characters in Peter Bruegel paintings. Laughing and crying and eating and having sex—and artificial intelligence isn’t going to change any of that.

John: Is fear of the new AI revolution misplaced or valid?

Rudy: Fear of what? That there will be hoaxes and scams on the web? Hello? Fear that bots will start doing people’s jobs? Tricky. If a bot can do part of your job, then let the bot do that, and that’s probably the part of the job that you don’t enjoy. You’ll do the other part. What’s the other part? Talking to people. Relating. Being human. The clerk gets paid for hanging around with the customers. Gets paid for being a host.

I’m indeed fascinated by the rapid progress of the ChatGPT-type wares. In his analysis of ChatGPT Stephen Wolfram breaks it all down for us. And, being Wolfram, he has the genius to run his flow of ideas on for about five times as long as a normal person would be able to. He seems to suggest that “intelligent thought” might be a very common process which complex systems naturally do.

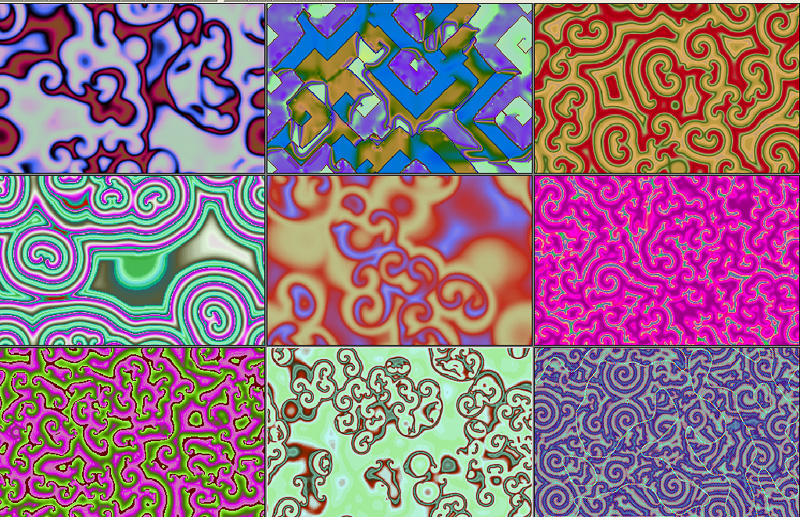

As an example of such a process, think about Zhabotinsky scrolls, which are moving patterns generated, for instance, by cellular automata, by reaction-diffusion chemical reactions, and by fluid dynamics. When you swirl milk into coffee, the paired vortices are Zhabotinsky scrolls. Mushroom caps and smoke rings are Zhabotinsky scrolls. Fetuses and germinating seeds are Zhabotinsky scrolls.

There are particular kinds of shapes and processes that nature likes to create—some are familiar, and some not. We’re talking about forms that recur over and over again, in all sorts of contexts. Ellipsoids, ferns, puddles, clouds, scrolls, and … minds? It could well be that mind-like behavior emerges very widely and naturally, with no effort at all—like whirlpools in a flowing stream.

AI workers used to imagine that the mind is like some big-ass flow-chart Boolean-valued logic-gate construct. But that’s just completely off base. The mind is smeared, analog, wet, jiggly, tangled. And no Lego block model is ever going to come close to the delicious is-ness of being alive.

It’s the illogical, incalculable, unanalyzable nature of the artificially-evolved neural-net tangles of ChatGPT-style AIs that gives them a chance. Harking back to Kurt Gödel again: When it comes to AI, if you can understand it, it’s wrong.

John: If AIs do a lot of the creative work for us, will it be genuinely creative?

Rudy: Right now writers and artists are sweating it. Most intelligent and creative people suffer from imposter syndrome. Like, I have no talent. I’ve been faking it for my whole life. I can’t write and I can’t paint. They’re going to wise up to me any day. A cheap-ass program in the cloud can do whatever I do. But meanwhile, what the hell, I might as well keep going. Maybe I can sell my stuff if I tell people that a bot made it.

But for now it seems like the prose and art by ChatGPT is obvious, cheesy, and even lamentable. Generally you wouldn’t mistake these results for real writing and real art. Especially if you’re a writer or an artist. But the big question still looms. How soon will ChatGPT-style programs outstrip us?

Maybe I’m foolish and vain, but I think it’ll be a long time. We underestimate ourselves. You’re an analog computation updated at max flop rates for decades. And boosted by being embedded in human society. A node in a millennia-old planet-spanning hive-mind.

Can bot fiction be as good as mine? Not happening soon.

John: Can an AI be conscious?

Rudy: Yes. Here’s how to emulate a simple version of consciousness, using a technique originally described by neurologist Antonio Damasio in the 1990s.

The AI constructs an ongoing mental movie that includes an iconic image of itself plus images of some objects in the world. It notes its interactions with the objects, and it rates these interactions. These ratings are feelings. And now suppose the AI has a second-order image of itself having these feelings. This is consciousness—the process of watching yourself have feelings about the world around you.
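The layers in that recipe can be laid out as a tiny data structure. This is a sketch of the bookkeeping only, under the assumption that a "feeling" is a single number and the second-order image is a text note. The names `Feeling`, `Agent`, and `interact` are invented for illustration, and nothing here claims to produce actual consciousness.

```python
from dataclasses import dataclass, field

@dataclass
class Feeling:
    obj: str        # the object the agent interacted with
    rating: float   # the agent's rating of that interaction: positive is good

@dataclass
class Agent:
    name: str = "self"                               # iconic image of itself
    movie: list = field(default_factory=list)        # first order: feelings about objects
    self_watch: list = field(default_factory=list)   # second order: watching itself feel

    def interact(self, obj, rating):
        # First order: note the interaction and rate it. The rating is the feeling.
        self.movie.append(Feeling(obj, rating))
        # Second order: form an image of itself having that feeling.
        if rating > 0:
            tone = "liking"
        elif rating < 0:
            tone = "disliking"
        else:
            tone = "indifferent to"
        note = f"{self.name} notices itself {tone} {obj}"
        self.self_watch.append(note)
        return note

if __name__ == "__main__":
    agent = Agent()
    print(agent.interact("sunlight", 0.8))
    print(agent.interact("rain", -0.3))
```

The point of the split is that `movie` holds feelings about the world, while `self_watch` holds the agent's picture of itself having them, which is the second-order step the description turns on.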

Some researchers feel that AIs need to have bodies in order to achieve true consciousness. Theological analogy: God can’t understand humanity until manifesting self as a human avatar. Sounds familiar…

I delved into the issue of bodies for AIs in my recent novel Juicy Ghosts. In my novel, people achieve software immortality by having emulations of them stored in the cloud. As I mentioned before, I call these emulations lifeboxes.

And the idea now is, as I say, that a lifebox should be linked to a physical meat body. Your personality comes not only from your software, but from your full body: the sense organs, the emotional flows, the lusts and hungers and fears. And from whatever mysterious quantum-computational processes are found in a meat body. You might use a pre-grown clone of your dead self, or you might parasitize or possess another person or even an animal. Main thing is that you need the analog, quantum-jiggling meat. Once you’ve got that, you’re not just a ghost—you’re a juicy ghost.

John: Can you fold in a happy memory of our early cyberpunk days together, Rudy?

Rudy: My favorite is when John and I went to a 1985 SF con in Austin. We stayed at Bruce Sterling’s apartment, maybe with Charles Platt and Lew Shiner. We were in town for a panel, as described in the “Cyberpunk” chapter of my autobio Nested Scrolls. The panel also included Pat Cadigan and Greg Bear. William Gibson couldn’t make it. Bruce joked that Bill was in Switzerland with Keith Richards getting his blood changed. I’d somehow rented a Lincoln—Hunter Thompson style—and one evening we were slow-rolling along an Austin main drag and John was sitting shotgun, and he leaned out of the window and repeatedly scream-drawled this phrase at the local burghers: “Y’all ever ate any liiive brains?” The good old days. Did they really happen?

John: They did. Juicy Ghosts is the most recent book I’ve read by you. What are you working on now?

Rudy: I’m not writing much these days. I’m in a strange state of mind. My dear wife Sylvia died four months ago. It might be okay to end my career with Juicy Ghosts, one of the very best books I ever wrote. It has amazing near-future tech, great characters, a wiggly plot, and some heavy revolutionary content. And I fucking had to self-publish it. If you’re curious about the process, you can browse my Juicy Ghosts writing notes. Another brick in the great wall of Rudy’s Lifebox.

I feel like I’m morphing into a painter. I took up painting in 1999 while writing my historical novel As Above, So Below about the Flemish master Peter Bruegel the Elder. I wanted to see how painting felt, and I quickly came to love it.

I enjoy the exploratory and non-digital nature of painting, and the luscious mixing of the colors. Sometimes I have a specific scenario in mind. Other times I don’t think very much about what I’m doing. I just paint and see what comes out. Working on a painting has a mindless quality that I like. The words go away, and my head is empty. And I can finish a painting in less than a week.

I’ve done about 250 paintings by now, and I’m steadily getting better. I like making them, and I’m doing okay with selling them through Rudy Rucker Paintings.

Who knows, maybe that’s my new career.

John: You’re a direct descendant of the philosopher Georg Hegel. Do you have an overall philosophy? I have a sense from many of your writings that you do, especially the later books of the Ware Tetralogy.

Rudy: I see the universe as being a single, living being. All is One. Gnarly and synchronistic, with everything connected. Like a giant dream that dreams itself. Or like the ultimate novel, except that it wrote itself, or is writing itself, hither and yon, from past to future, all times at once. This production’s got the budget, baby.

The meaning? Life, beauty and love.

For details, check out the last section of my tome, The Lifebox, the Seashell, and the Soul.

As I said, most of the material in this blog post is drawn from John Shirley’s email interview with me for Brock Hinzmann’s new ezine Instant Future. Check it out.

And thanks for the interview, John. These were good questions.

Y’all ever ate any liiive brains?